Some concepts that look similar can be confusing, especially when you only see their abbreviations. This article tries to distinguish OLS, GLS, WLS, PLS, LARS and ALS.

1. OLS - Ordinary Least Squares

- No Comment.

2. GLS - Generalized Least Squares

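Here we’re not assuming the errors have constant variance and are uncorrelated. Instead, they are allowed to have a general covariance matrix Σ.

Find the triangular matrix S with the Cholesky decomposition, Σ = S S^T (S acts as a square root of the error covariance matrix). Multiplying both sides of the regression equation by S^-1 gives a transformed model whose errors are uncorrelated with constant variance, so ordinary least squares can be applied to it.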
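The whitening step can be sketched in a few lines of NumPy. This is only an illustration under assumptions that are not in the text above: the error covariance matrix is known (in practice it usually has to be estimated), and the names `gls_via_cholesky`, `Sigma` and the AR(1)-style toy covariance are made up for the demo.

```python
import numpy as np

def gls_via_cholesky(X, y, Sigma):
    """GLS estimate obtained by whitening with the Cholesky factor of Sigma."""
    S = np.linalg.cholesky(Sigma)       # lower triangular, Sigma = S @ S.T
    X_w = np.linalg.solve(S, X)         # S^-1 X
    y_w = np.linalg.solve(S, y)         # S^-1 y
    # Plain OLS on the transformed (whitened) problem
    beta, *_ = np.linalg.lstsq(X_w, y_w, rcond=None)
    return beta

# Toy data (assumed for illustration): correlated errors with
# covariance 0.6^|i-j| between observations i and j
rng = np.random.default_rng(0)
n = 50
X = np.column_stack([np.ones(n), rng.normal(size=n)])
idx = np.arange(n)
Sigma = 0.6 ** np.abs(idx[:, None] - idx[None, :])
errors = np.linalg.cholesky(Sigma) @ rng.normal(size=n)
y = X @ np.array([1.0, 2.0]) + errors

beta_gls = gls_via_cholesky(X, y, Sigma)
```

The result should agree with the textbook closed form (X^T Σ^-1 X)^-1 X^T Σ^-1 y, since Σ^-1 = S^-T S^-1; solving against the triangular factor just avoids forming Σ^-1 explicitly.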

3. WLS - Weighted Least Squares

A special case of GLS: the errors are uncorrelated but have unequal variances, so the error covariance matrix is diagonal.

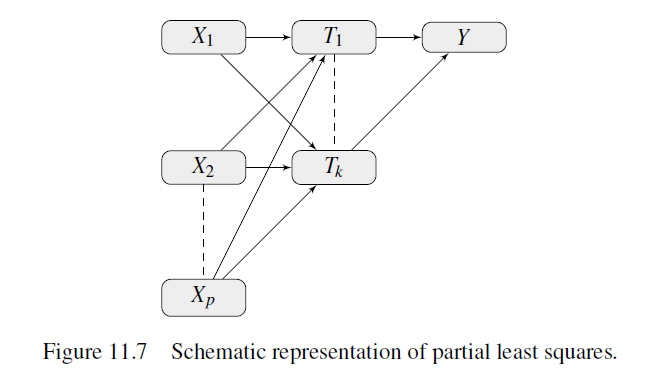
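With a diagonal covariance the transformation reduces to rescaling each observation by one over its error standard deviation. A minimal sketch, assuming the per-observation variances are known; the function name `wls` and the toy data (noise that grows with x) are illustrative only:

```python
import numpy as np

def wls(X, y, variances):
    """Weighted least squares with weights 1 / variance for each observation."""
    w_sqrt = 1.0 / np.sqrt(variances)
    # Scale each row by 1/sigma_i, then solve an ordinary least squares problem
    beta, *_ = np.linalg.lstsq(X * w_sqrt[:, None], y * w_sqrt, rcond=None)
    return beta

# Toy data (assumed): the noise standard deviation grows linearly with x
rng = np.random.default_rng(1)
n = 40
x = np.linspace(1.0, 10.0, n)
X = np.column_stack([np.ones(n), x])
variances = x ** 2
y = X @ np.array([0.5, 1.5]) + rng.normal(size=n) * np.sqrt(variances)

beta_wls = wls(X, y, variances)
```

Noisy observations get small weights, so they pull on the fit less than the precise ones.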

4. PLS - Partial Least Squares

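Same idea as PCR (Principal Component Regression). The difference is that PLS also uses Y when choosing the components: PCR builds them from X alone, while PLS picks them for how well they predict Y, in the same supervised way that ordinary linear regression fits its coefficients.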

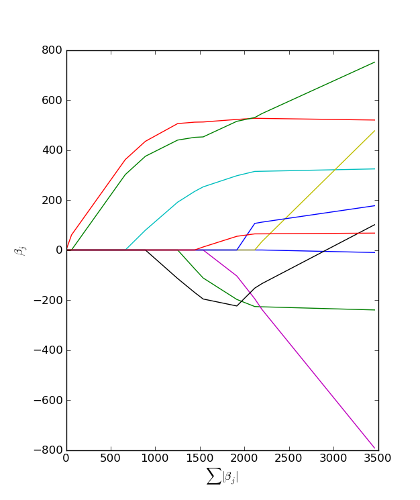
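To make the contrast concrete, here is a small sketch that compares only the first component of each method. It assumes a single response (the PLS1 case), where the first PLS direction is proportional to X^T y; the toy data and variable names are made up for illustration.

```python
import numpy as np

# Toy data (assumed): feature 0 has the largest variance,
# but y depends on the low-variance feature 1
rng = np.random.default_rng(2)
n, p = 100, 5
X = rng.normal(size=(n, p)) * np.array([5.0, 1.0, 1.0, 1.0, 1.0])
y = X[:, 1] + 0.1 * rng.normal(size=n)

Xc = X - X.mean(axis=0)
yc = y - y.mean()

# PCR: first direction is the top right singular vector of X (ignores y)
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
w_pcr = Vt[0]

# PLS (single response): first direction is proportional to X^T y
w_pls = Xc.T @ yc
w_pls /= np.linalg.norm(w_pls)

# Unnormalized covariance between each component score and y
cov_pcr = abs((Xc @ w_pcr) @ yc)
cov_pls = abs((Xc @ w_pls) @ yc)
```

By construction no unit-length direction can give a larger covariance with y than `w_pls`, so `cov_pls` is never smaller than `cov_pcr`.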

5. LARS - Least Angle Regression

It’s an algorithm for fitting linear regression with L1 regularization (Lasso).

Big idea: move a coef along the most correlated feature until another feature becomes just as correlated with the residual.

- Start with all coef 0

- Find feature x_i most correlated with y

- Increase the corresponding b_i, updating the residual r along the way. Stop when some x_j has as much correlation with r as x_i.

- Increase (b_i, b_j) in their joint least squares direction, until some other x_m has as much correlation with r.

- If a non-zero coef hits 0, remove it from the active set of features and recompute the joint direction.

- Continue until all features are in the model.
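The first three steps of the list above can be sketched directly in NumPy. This is not a full LARS implementation: it only performs the first move, and it assumes the columns of X are centered and scaled to unit norm so that inner products with the residual play the role of correlations. The toy data and variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p = 60, 4
X = rng.normal(size=(n, p))
y = X @ np.array([3.0, 1.5, 0.0, 0.0]) + 0.5 * rng.normal(size=n)

# Standardize: centered, unit-norm columns and a centered response
X = X - X.mean(axis=0)
X = X / np.linalg.norm(X, axis=0)
y = y - y.mean()

b = np.zeros(p)                  # start with all coef 0
c = X.T @ y                      # correlations with the residual r = y
i = int(np.argmax(np.abs(c)))    # feature most correlated with y
C = abs(c[i])
s = np.sign(c[i])
a = X.T @ (s * X[:, i])          # change in each correlation per unit step

# Smallest positive step at which some x_j ties with x_i
gamma, j = np.inf, -1
for k in range(p):
    if k == i:
        continue
    for cand in ((C - c[k]) / (1 - a[k]), (C + c[k]) / (1 + a[k])):
        if 0 < cand < gamma:
            gamma, j = cand, k

b[i] = s * gamma                 # move b_i and stop at the tie
r = y - X @ b
corr = X.T @ r                   # |corr[i]| and |corr[j]| are now equal
```

From this point the algorithm would move b_i and b_j together; in practice a library routine is the safer choice for the full path.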

Generally speaking, it behaves like a forward selection algorithm, and with the rule for dropping coefs that hit 0 it runs in Lasso mode. I’ll talk about Ridge, Lasso and ElasticNet regularizations in another article.

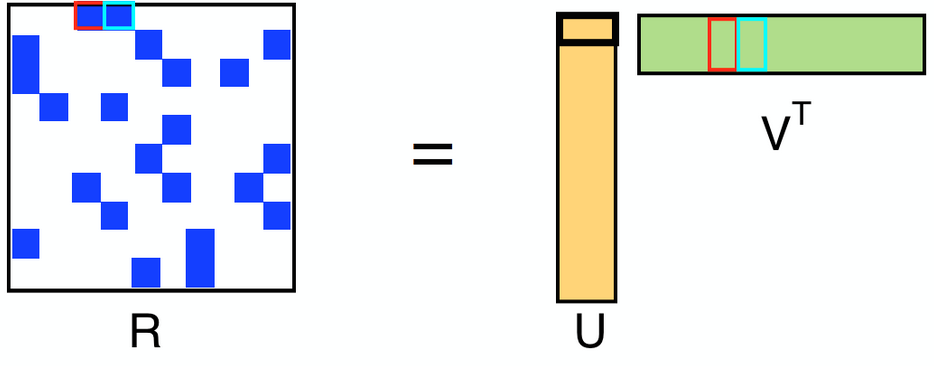

6. ALS - Alternating Least Squares

Used in collaborative filtering, a powerful technique for building recommendation systems.

Here we want the product UV to be as close as possible to the rating matrix R. Since U and V are low rank, a perfect fit is generally impossible. But that’s actually a good thing, because it reduces the number of iterations ALS needs.

General idea of ALS: fix one matrix, optimize the other, then swap roles and iterate. It resembles the EM algorithm; the objective function here is the loss (difference) between R and UV.
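A minimal sketch of the alternation, under simplifying assumptions that are not in the text: R is fully observed (real rating matrices have missing entries, and only the observed ones enter the loss), and a small ridge penalty `lam` is added to keep each solve well conditioned. The function name `als` and its parameters are illustrative.

```python
import numpy as np

def als(R, rank, lam=0.1, n_iters=20, seed=0):
    """Alternate ridge least squares solves for U (m x rank) and V (rank x n)."""
    rng = np.random.default_rng(seed)
    m, n = R.shape
    U = rng.normal(size=(m, rank))
    V = rng.normal(size=(rank, n))
    I = np.eye(rank)
    losses = []
    for _ in range(n_iters):
        # Fix V, solve the least squares problem for U
        U = np.linalg.solve(V @ V.T + lam * I, V @ R.T).T
        # Fix U, solve the least squares problem for V
        V = np.linalg.solve(U.T @ U + lam * I, U.T @ R)
        # Penalized loss between R and UV
        loss = np.sum((R - U @ V) ** 2) + lam * (np.sum(U ** 2) + np.sum(V ** 2))
        losses.append(loss)
    return U, V, losses

# Toy rating matrix (assumed): 8 users, 6 items, ratings 1 to 5
rng = np.random.default_rng(4)
R = rng.integers(1, 6, size=(8, 6)).astype(float)
U, V, losses = als(R, rank=2)
```

Each half-step solves its subproblem exactly, so the penalized loss never increases from one iteration to the next, which is the same monotone behaviour EM is known for.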

I’ll talk about collaborative filtering, along with recommendation systems and RSA, next time.